Azure Sentinel Data Export to Azure Storage

How to make AdHoc KQL queries to long term storage logs

There are many ways you can export Azure Sentinel data to longer term Azure Storage accounts as blobs of data. These data log exports can also be stored for very cheap as blobs and can also be recalled in via a KQL query in a AdHoc manner.

One of the more easy and cloud native methods is The Data Export rule in Azure Sentinel, specifically in the log analytics workspace. The architecture looks like this

Let’s walk through setting this up and testing. First create a Azure Storage account with LRS replication.

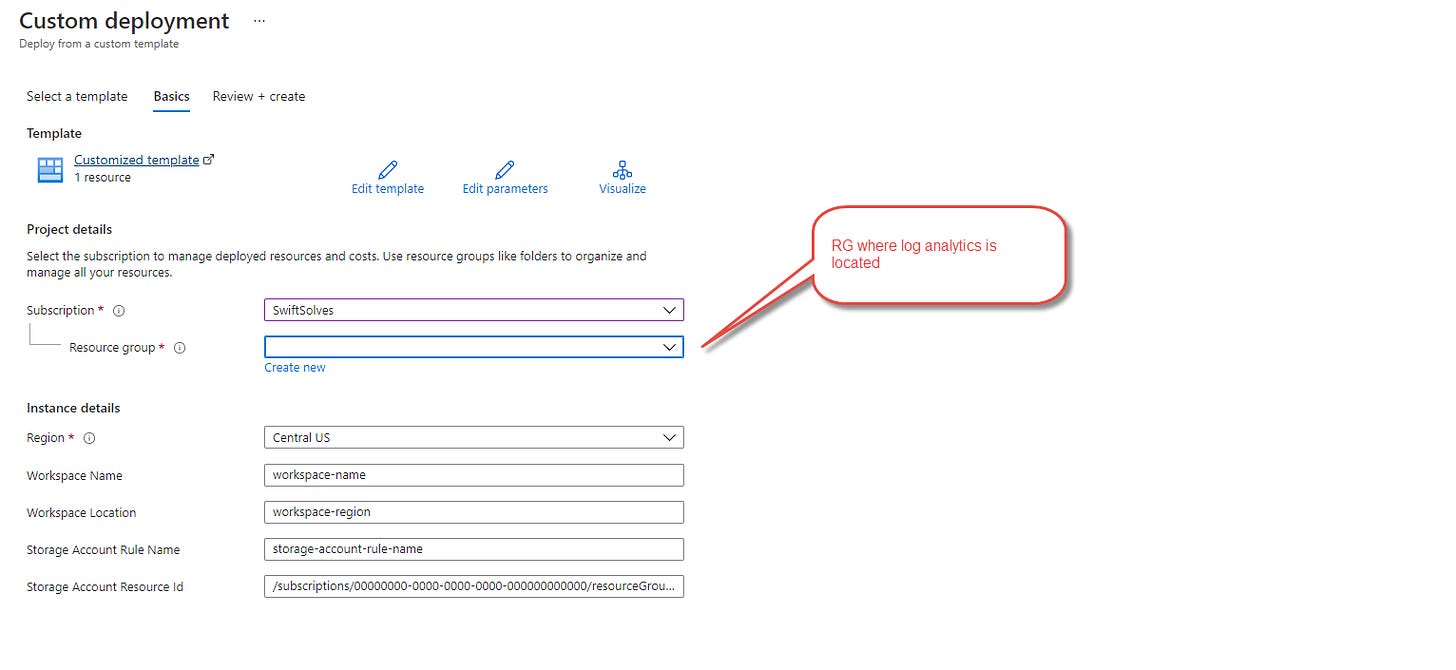

Within Azure Sentinel you can export the data tables very easily using a feature that the underlying Log Analytics workspace has called ‘data export ‘. You can then go to create a resource in the portal and search for ‘Template deployment (deploy using custom templates)‘ Choose Build your own template in the editor.

Copy the ARM Template to create a data export rule to a storage account using template. Paste the template in the editor over the existing code.

Scroll down and update the array of tablesNames that you want to export to the storage account. *Please note that most tables can be exported. At the time of this writing however Custom logs or table names that end in _CL cannot be exported. For a more comprehensive list see Unsuported and Supported tables section.

Click Save and update the fields accordingly and then deploy then export rule. *Note that the Resource Group where Log Analytics is in should be chosen to deploy the rule for proper rule creation.

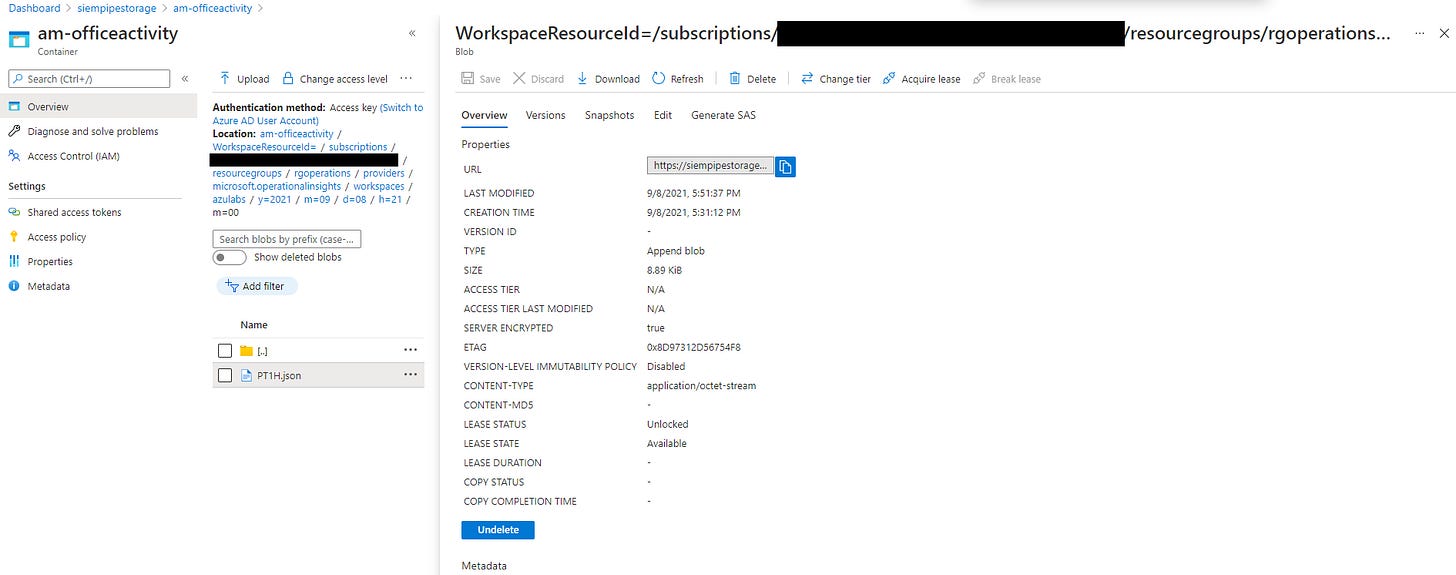

The tables of data exported automatically become containers called am-tablename and have a resource path of:

WorkspaceResourceId=/subscriptions/subscription-id/resourcegroups/<resource-group>/providers/microsoft.operationalinsights/workspaces/<workspace>/y=<four-digit numeric year>/m=<two-digit numeric month>/d=<two-digit numeric day>/h=<two-digit 24-hour clock hour>/m=00/PT1H.json

Now when security, cloud ops, compliance, or legal need to query the data and get certain results or visualize historical data they can use the externaldata( )[ ] kql operator in Azure Sentinel and point it to a file or set of files to query.

*To learn more about the KQL and how to lookup multiple blobs check out the documentation.

The base kql query will look like this where we will need to fill in a few things:

externaldata(INSERT SCHEMA HERE)

[

h@"https://STORAGEACCOUNTNAME.blob.core.windows.net/am-officeactivity/SASSIG"

]

with(format="json")To do this we must first build the schema for the table of information we are looking up. Go to Azure Sentinel and Logs blade and use the following query to generate the schema:

OfficeActivity

| getschema

| extend schemaprint = tostring(strcat(ColumnName,':',ColumnType,', '))

| project schemaprintCopy and paste and cleanup in notepad. *Tip here be careful some schemas generate more than one page of columns as the default view only shows 50 records.

Once cleaned up paste into the ( ) area replacing the INSERT SCHEMA HERE. Your KQL query should now look like this.

externaldata(TenantId:string, Application:string, UserDomain:string, UserAgent:string, RecordType:string, TimeGenerated:datetime, Operation:string, OrganizationId:string, OrganizationId_:string, UserType:string, UserKey:string, OfficeWorkload:string, ResultStatus:string, ResultReasonType:string, OfficeObjectId:string, UserId:string, UserId_:string, ClientIP:string, ClientIP_:string, Scope:string, Site_:string, ItemType:string, EventSource:string, Source_Name:string, MachineDomainInfo:string, MachineId:string, Site_Url:string, Site_Url_:string, SourceRelativeUrl:string, SourceRelativeUrl_:string, SourceFileName:string, SourceFileName_:string, SourceFileExtension:string, DestinationRelativeUrl:string, DestinationFileName:string, DestinationFileExtension:string, UserSharedWith:string, SharingType:string, CustomEvent:string, Event_Data:string, ModifiedObjectResolvedName:string, Parameters:string, ExternalAccess:string, OriginatingServer:string, OrganizationName:string, Logon_Type:string, InternalLogonType:int, MailboxGuid:string, MailboxOwnerUPN:string, MailboxOwnerSid:string, MailboxOwnerMasterAccountSid:string, LogonUserSid:string, LogonUserDisplayName:string, ClientInfoString:string, Client_IPAddress:string, ClientMachineName:string, ClientProcessName:string, ClientVersion:string, Folder:string, CrossMailboxOperations:bool, DestMailboxId:string, DestMailboxOwnerUPN:string, DestMailboxOwnerSid:string, DestMailboxOwnerMasterAccountSid:string, DestFolder:string, Folders:string, AffectedItems:string, Item:string, ModifiedProperties:string, SendAsUserSmtp:string, SendAsUserMailboxGuid:string, SendOnBehalfOfUserSmtp:string, SendonBehalfOfUserMailboxGuid:string, ExtendedProperties:string, Client:string, LoginStatus:int, Actor:string, ActorContextId:string, ActorIpAddress:string, InterSystemsId:string, IntraSystemId:string, SupportTicketId:string, TargetContextId:string, DataCenterSecurityEventType:int, EffectiveOrganization:string, ElevationTime:datetime, ElevationApprover:string, ElevationApprovedTime:datetime, ElevationRequestId:string, ElevationRole:string, ElevationDuration:int, GenericInfo:string, SourceSystem:string, OfficeId:string, SourceRecordId:string, AzureActiveDirectory_EventType:string, AADTarget:string, Start_Time:datetime, OfficeTenantId:string, OfficeTenantId_:string, TargetUserOrGroupName:string, TargetUserOrGroupType:string, MessageId:string, Members:dynamic, TeamName:string, TeamGuid:string, ChannelType:string, ChannelName:string, ChannelGuid:string, ExtraProperties:dynamic, AddOnType:string, AddonName:string, TabType:string, Name:string, OldValue:string, NewValue:string, ItemName:string, ChatThreadId:string, ChatName:string, CommunicationType:string, AADGroupId:string, AddOnGuid:string, AppDistributionMode:string, TargetUserId:string, OperationScope:string, AzureADAppId:string, OperationProperties:dynamic, AppId:string, ClientAppId:string, Type:string, _ResourceId:string)

[

h@"https://STORAGEACCOUNTNAME.blob.core.windows.net/am-officeactivity/SASSIG"

]

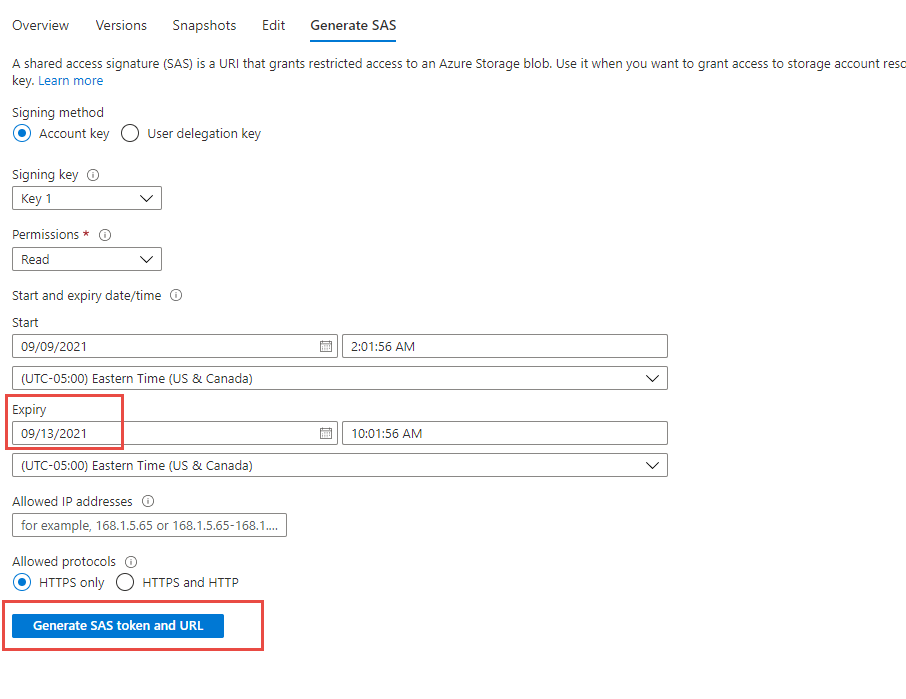

with(format="json")Now we must find the blobs with the date and times we want to query and generate a SAS signature. Using the Azure portal locate a blob of interest and press the Generate SAS tab

Be sure to update the expiration date a few hours or days out in case the team needs to do some extended work and KQLK query against the historical data. Press generate SAS Token and URL

be sure to copy the Blob SAS URL and paste it into the KQL query replacing everything in @h” ”

Your KQL query should look like:

externaldata(TenantId:string, Application:string, UserDomain:string, UserAgent:string, RecordType:string, TimeGenerated:datetime, Operation:string, OrganizationId:string, OrganizationId_:string, UserType:string, UserKey:string, OfficeWorkload:string, ResultStatus:string, ResultReasonType:string, OfficeObjectId:string, UserId:string, UserId_:string, ClientIP:string, ClientIP_:string, Scope:string, Site_:string, ItemType:string, EventSource:string, Source_Name:string, MachineDomainInfo:string, MachineId:string, Site_Url:string, Site_Url_:string, SourceRelativeUrl:string, SourceRelativeUrl_:string, SourceFileName:string, SourceFileName_:string, SourceFileExtension:string, DestinationRelativeUrl:string, DestinationFileName:string, DestinationFileExtension:string, UserSharedWith:string, SharingType:string, CustomEvent:string, Event_Data:string, ModifiedObjectResolvedName:string, Parameters:string, ExternalAccess:string, OriginatingServer:string, OrganizationName:string, Logon_Type:string, InternalLogonType:int, MailboxGuid:string, MailboxOwnerUPN:string, MailboxOwnerSid:string, MailboxOwnerMasterAccountSid:string, LogonUserSid:string, LogonUserDisplayName:string, ClientInfoString:string, Client_IPAddress:string, ClientMachineName:string, ClientProcessName:string, ClientVersion:string, Folder:string, CrossMailboxOperations:bool, DestMailboxId:string, DestMailboxOwnerUPN:string, DestMailboxOwnerSid:string, DestMailboxOwnerMasterAccountSid:string, DestFolder:string, Folders:string, AffectedItems:string, Item:string, ModifiedProperties:string, SendAsUserSmtp:string, SendAsUserMailboxGuid:string, SendOnBehalfOfUserSmtp:string, SendonBehalfOfUserMailboxGuid:string, ExtendedProperties:string, Client:string, LoginStatus:int, Actor:string, ActorContextId:string, ActorIpAddress:string, InterSystemsId:string, IntraSystemId:string, SupportTicketId:string, TargetContextId:string, DataCenterSecurityEventType:int, EffectiveOrganization:string, ElevationTime:datetime, ElevationApprover:string, ElevationApprovedTime:datetime, ElevationRequestId:string, ElevationRole:string, ElevationDuration:int, GenericInfo:string, SourceSystem:string, OfficeId:string, SourceRecordId:string, AzureActiveDirectory_EventType:string, AADTarget:string, Start_Time:datetime, OfficeTenantId:string, OfficeTenantId_:string, TargetUserOrGroupName:string, TargetUserOrGroupType:string, MessageId:string, Members:dynamic, TeamName:string, TeamGuid:string, ChannelType:string, ChannelName:string, ChannelGuid:string, ExtraProperties:dynamic, AddOnType:string, AddonName:string, TabType:string, Name:string, OldValue:string, NewValue:string, ItemName:string, ChatThreadId:string, ChatName:string, CommunicationType:string, AADGroupId:string, AddOnGuid:string, AppDistributionMode:string, TargetUserId:string, OperationScope:string, AzureADAppId:string, OperationProperties:dynamic, AppId:string, ClientAppId:string, Type:string, _ResourceId:string)

[

h@"https://siempipestorage.blob.core.windows.net/am-officeactivity/WorkspaceResourceId%3D/subscriptions/f77542d9-6668-477b-adec-0553061c0811/resourcegroups/rgoperations/providers/microsoft.operationalinsights/workspaces/azulabs/y%3D2021/m%3D09/d%3D08/h%3D21/m%3D00/PT1H.json?sp=r&st=2021-09-09T06:01:56Z&se=2021-09-13T14:01:56Z&spr=https&sv=2020-08-04&sr=b&sig=%2F7%2Bx2N1oz6pWlm5PTslXb9sCyUv8avu6IVUp1UaDeqc%3D"

]

with(format="json")You can now use and execute this KQL query in Azure Sentinel to lookup historical data.

You now have a AdHoc operational procedure and base KQL query you can now use to lookup for compliance or historical searching as needed. I have started a project to capture the base KQL query examples with schema for each data table here: kql/externaldata at main · swiftsolves-msft/kql (github.com)

Future work I want to do and submit to Azure Sentinel GitHub as a Pull Request will include a .ps1 script that asks for table you want to look up, storage account name where archived data rests, and historical date ranges. Once entered in the script will enumerate across the storage account and generate the necessary SAS signatures on the blobs and then generate a updated KQL query to use.